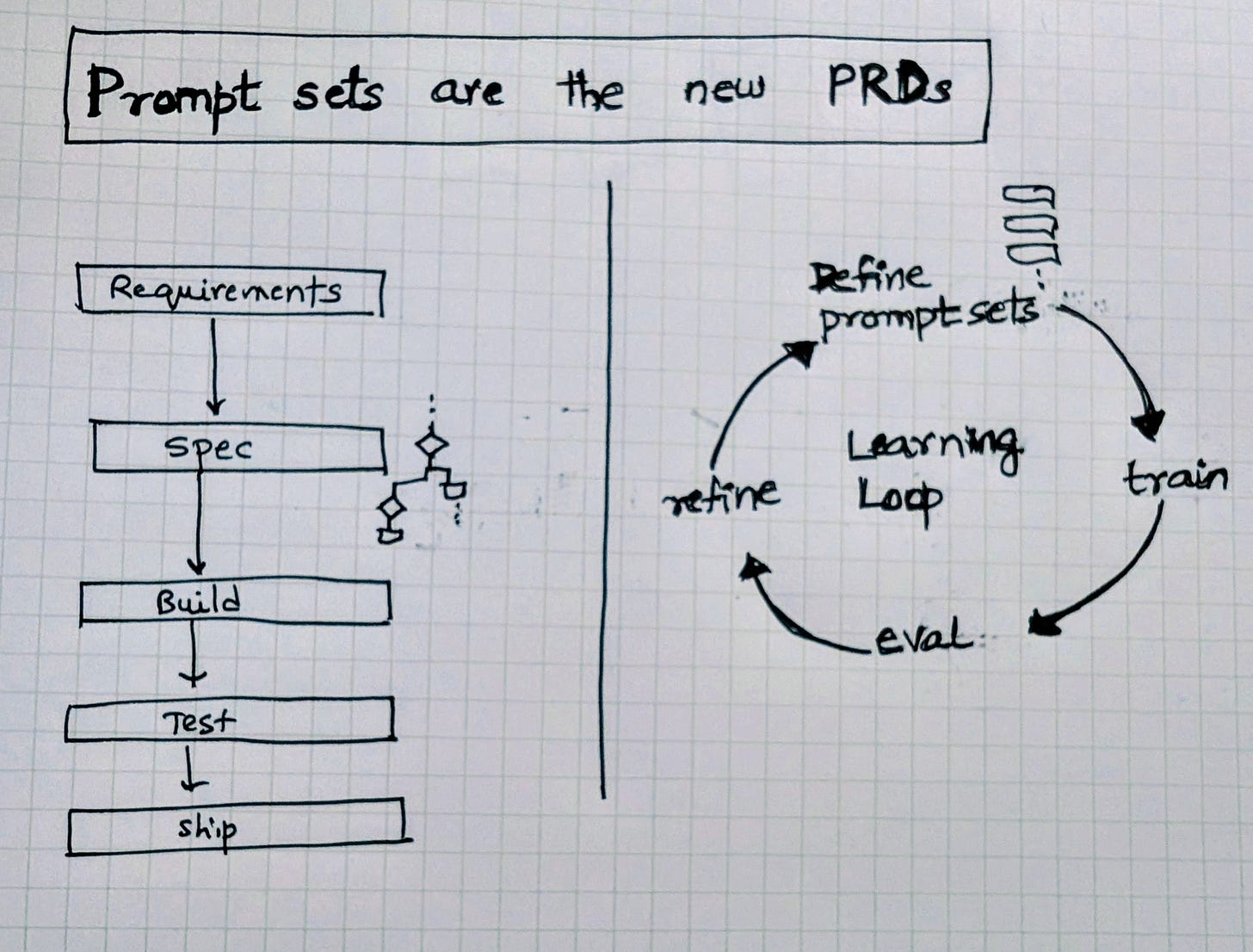

A lot of folks have heard me say (on Lenny’s podcast, for example) that prompt sets are the new PRDs.

At Microsoft, our teams have been building M365 Copilot and agents that can work alongside you on the job: a Researcher, an Analyst, a Project Manager, with many others in the works. And as these agents take shape, the question I keep returning to is: what does a “spec” really mean in this new world?

Human intent as the spec

“How would you ask your coworker for this?”

This is the... uh... prompt I posed to folks in a meeting the other day.

I asked this not to arbitrarily anthropomorphize agents, but because my intuition is that this question forces the right granularity for what we ask of them.

In some sense I see this as a variant of Ilya Sutskever’s observation: “Predicting the next token well means that you understand the underlying reality that led to the creation of that token.”

Next-token prediction worked because the task itself demanded depth: to predict words well, a model had to capture structure, context, and causality.

My hunch is that prompts that reflect human intent set a similar expectation for agents. They push the system toward usefulness rather than rote mechanics.

Good: “Tell me the most important things from the customer meeting. What were the key risks, and how did sentiment trend?”

Bad (too mechanical): “Summarize the transcript in 5 bullets.”

Bad (too tool-specific): “Run sentiment analysis on transcript.json and output JSON.”

Bad (too coarse): “Handle all my email.”

The difference lies in whether the prompt encodes judgment and priorities: the elements a human colleague would naturally understand and, more importantly, the level at which you would operate.

Prompt sets as teaching tools

Traditional PRDs were written for programmers. They locked requirements down in advance, then handed them off to be built. Prompt sets work differently. They are living artifacts: part specification, part training data.

Each prompt is an example that shapes how the agent behaves. Together, I almost see them forming a curriculum that teaches the system what “good” looks like, how to correct mistakes, and where the boundaries are.
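To make the curriculum idea a bit more concrete, here is a minimal sketch of a prompt set as a small data structure. This is an illustration, not how any team actually stores prompt sets: the `Role`, `PromptExample`, and `PromptSet` names are hypothetical, the three roles simply mirror the three things a curriculum teaches, and the correction prompt is made up for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    """What a prompt teaches the agent (hypothetical categories)."""
    GOOD = "what good looks like"
    CORRECTION = "how to correct a mistake"
    BOUNDARY = "where the boundaries are"


@dataclass
class PromptExample:
    prompt: str
    role: Role
    expectation: str  # plain-language description of a good response


@dataclass
class PromptSet:
    name: str
    examples: list[PromptExample] = field(default_factory=list)

    def add(self, prompt: str, role: Role, expectation: str) -> None:
        self.examples.append(PromptExample(prompt, role, expectation))

    def missing_roles(self) -> list[Role]:
        """Roles with no example yet, i.e. gaps in the curriculum."""
        covered = {e.role for e in self.examples}
        return [r for r in Role if r not in covered]


# Illustrative content only.
meeting_set = PromptSet("customer-meeting-insights")
meeting_set.add(
    "Tell me the most important things from the customer meeting. "
    "What were the key risks, and how did sentiment trend?",
    Role.GOOD,
    "Prioritized takeaways, named risks, and a sentiment trend.",
)
meeting_set.add(
    "Handle all my email.",
    Role.BOUNDARY,
    "Ask what 'handle' means instead of acting on everything.",
)

print([r.name for r in meeting_set.missing_roles()])

# A made-up correction prompt closes the remaining gap.
meeting_set.add(
    "You skipped the pricing concern the customer raised. Redo the risks.",
    Role.CORRECTION,
    "Revised risk list that includes the pricing concern.",
)
print([r.name for r in meeting_set.missing_roles()])
```

The useful part is less the classes than the `missing_roles` check: it treats the prompt set as something you can inspect for gaps, which a static document does not let you do.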

A multi-round game

Writing prompt sets is never a one-shot exercise. You start with a few, test them, see where the agent falls short, and refine. Each round closes the gap between what you asked for and what the system delivers.
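The loop described above can be sketched in a few lines. Treat this as a toy under stated assumptions: `refine_until_good`, `toy_agent`, `toy_meets_bar`, and `toy_refine` are all hypothetical names, the agent is a stub rather than a real model call, and in practice the "meets the bar" check and the refinement step are human judgment, not string matching.

```python
from typing import Callable


def refine_until_good(
    prompts: list[str],
    agent: Callable[[str], str],
    meets_bar: Callable[[str, str], bool],
    refine: Callable[[str], str],
    max_rounds: int = 5,
) -> tuple[list[str], list[int]]:
    """Test prompts, refine the ones that fall short, and repeat.

    Returns the final prompts and the number of failures seen in each round.
    """
    history: list[int] = []
    for _ in range(max_rounds):
        # Test: run each prompt and keep the ones whose output falls short.
        failures = [p for p in prompts if not meets_bar(p, agent(p))]
        history.append(len(failures))
        if not failures:
            break
        # Refine: rewrite only the prompts that fell short.
        prompts = [refine(p) if p in failures else p for p in prompts]
    return prompts, history


# Toy stand-ins so the loop runs end to end (assumptions, not a real agent).
def toy_agent(prompt: str) -> str:
    return f"Response to: {prompt}"


def toy_meets_bar(prompt: str, response: str) -> bool:
    # Stand-in for a human reviewer: did the output address risks at all?
    return "risks" in response.lower()


def toy_refine(prompt: str) -> str:
    # Stand-in for a human rewriting the prompt to carry more intent.
    return prompt + " What were the key risks?"


start = [
    "Summarize the transcript in 5 bullets.",
    "Tell me the key risks from the customer meeting.",
]
final, history = refine_until_good(start, toy_agent, toy_meets_bar, toy_refine)
print(history)  # failures per round
print(final)
```

The per-round failure count is the number worth watching: if it stops shrinking, that usually says more about the bar or the prompts than about the agent.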

It feels less like drafting a rigid contract and more like coaching. The spec can continuously evolve, and most importantly, I am finding that you need to put human intuition at the center: guiding, adjusting, raising the bar.

That’s why I keep coming back to this idea: Prompt sets are the new PRDs. They encode intent, they teach, and they set the rhythm for iteration.

Good idea. I went a step further and created a GPT plugin that takes in a PRD and converts it into a prompt spec. Here's the link; try it out and let me know what you think.

https://chatgpt.com/g/g-68b1c4e846f88191885be9e950efcca1-prompt-spec-architect

I used the PRD below to test it out. It addresses a gap I noticed in Microsoft Teams that could be a great value add.

Write an example spec based on the above principle for the below PRD

Product Requirements Document (PRD): AI-Powered Meeting Insights with LLM Integration in Microsoft Teams

Overview

Integrate a Large Language Model (LLM) into Microsoft Teams to analyze meeting transcripts, understand intent, and provide:

Alternate dimensions and ideas for discussion topics

Strategic plans and suggestions

Missed points identification with value addition

Relevance scoring for discussed items

Key Features

Meeting Transcript Analysis: Analyze meeting transcripts using an LLM to identify key topics, intent, and context.

Idea Generation: Generate alternate dimensions and ideas for discussion topics.

Strategic Planning: Provide strategic plans and suggestions.

Missed Points Identification: Identify potential missed points and their value addition.

Relevance Scoring: Score discussed items based on relevance to the context.

Functional Requirements

LLM Integration: Integrate a suitable LLM with Microsoft Teams.

Transcript Ingestion: Ingest meeting transcripts.

Contextual Understanding: Analyze meeting context.

Output

Meeting Summary: Summary of key points.

Alternate Ideas: Alternate dimensions and ideas.

Strategic Plans: Strategic plans and suggestions.

Missed Points: Potential missed points with value addition.

Relevance Scorecard: Scorecard for discussed items with relevance scores.

Relevance Scoring

Scoring Algorithm: Develop an algorithm to score relevance (e.g., 1-5).

Factors: Consider factors like context, intent, and impact.

Value Addition for Missed Points

Potential Impact: Estimate potential impact of missed points.

Recommendations: Provide recommendations for incorporating missed points.

Success Metrics

User Adoption Rate: Measure adoption.

Insight Quality: Evaluate relevance and usefulness.

Thanks for sharing this article. I really enjoyed it. It’s made me rethink the way we document and learn as we build. Compared to the traditional approach, I find prompt sets brilliant for encouraging bottom-up experimentation. But how do we ensure they don’t fragment product thinking? A PRD (hopefully well written!) forces you to connect the dots between features, edge cases, and the broader narrative. How do we protect that connectivity in this case?